From the Top: How Technology Executives View Generative AI’s Role in Cybersecurity

In the ever-changing realm of digital security, the integration and impact of Generative Artificial Intelligence (GenAI) are pivotal topics for organizations worldwide. Our technology experts have conducted an in-depth analysis of GenAI’s multifaceted role in cybersecurity. This investigation examines the evolving tactics of cybercriminals empowered by GenAI, how GenAI can bolster security operations teams, the challenges of mitigating GenAI-driven threats, and the extent of regulation needed to safeguard digital assets. Drawing on our survey of leading technology executives, this report aims to illuminate the complexities and emerging strategies that define the intersection of GenAI and cybersecurity.

Methodology and Participant Profile

The survey was carried out across a broad range of councils, including Pittsburgh, Tennessee, Austin, Florida, Philadelphia, Minneapolis, Atlanta, Michigan, Houston, the St. Louis/Kansas City area, North Carolina, Ohio, Denver, Salt Lake City, New York City, London, Dublin, Vancouver, Toronto, San Francisco, Sydney, and broader areas of Europe. This breadth of engagement is intended to capture a wide spectrum of viewpoints and to provide a global picture of GenAI’s impact on cybersecurity.

Purpose of the Survey

The purpose of this survey is to understand the perspectives and strategic insights of technology executives regarding the integration and implications of Generative AI (GenAI) in cybersecurity. By engaging with leaders across a diverse set of regions and industries, we aim to gather a rich array of viewpoints on several critical areas:

- Assessment of Threats: Understanding how technology executives perceive the risk of GenAI-enabled cyber threats. This involves evaluating their concerns regarding the evolving tactics of threat actors who may utilize GenAI for malicious purposes such as phishing, social engineering, and advanced persistent threats.

- Strategic Advantages: Exploring how these leaders envision using GenAI to enhance their cybersecurity measures. This includes identifying the most beneficial applications of GenAI in defending against and mitigating cyber threats, from automated threat detection systems to advanced anomaly analysis.

- Challenges and Mitigation Strategies: Investigating the specific challenges organizations face in countering GenAI-driven cyber threats and the strategies they are implementing to mitigate these risks. This also involves understanding the measures taken to ensure the ethical use of GenAI within their operations.

- Regulatory Perspective: Gauging the opinions of technology executives on the extent of regulation needed to manage the risks associated with GenAI in cybersecurity. This will provide insight into the balance between fostering innovation and ensuring security and ethical compliance in the use of AI technologies.

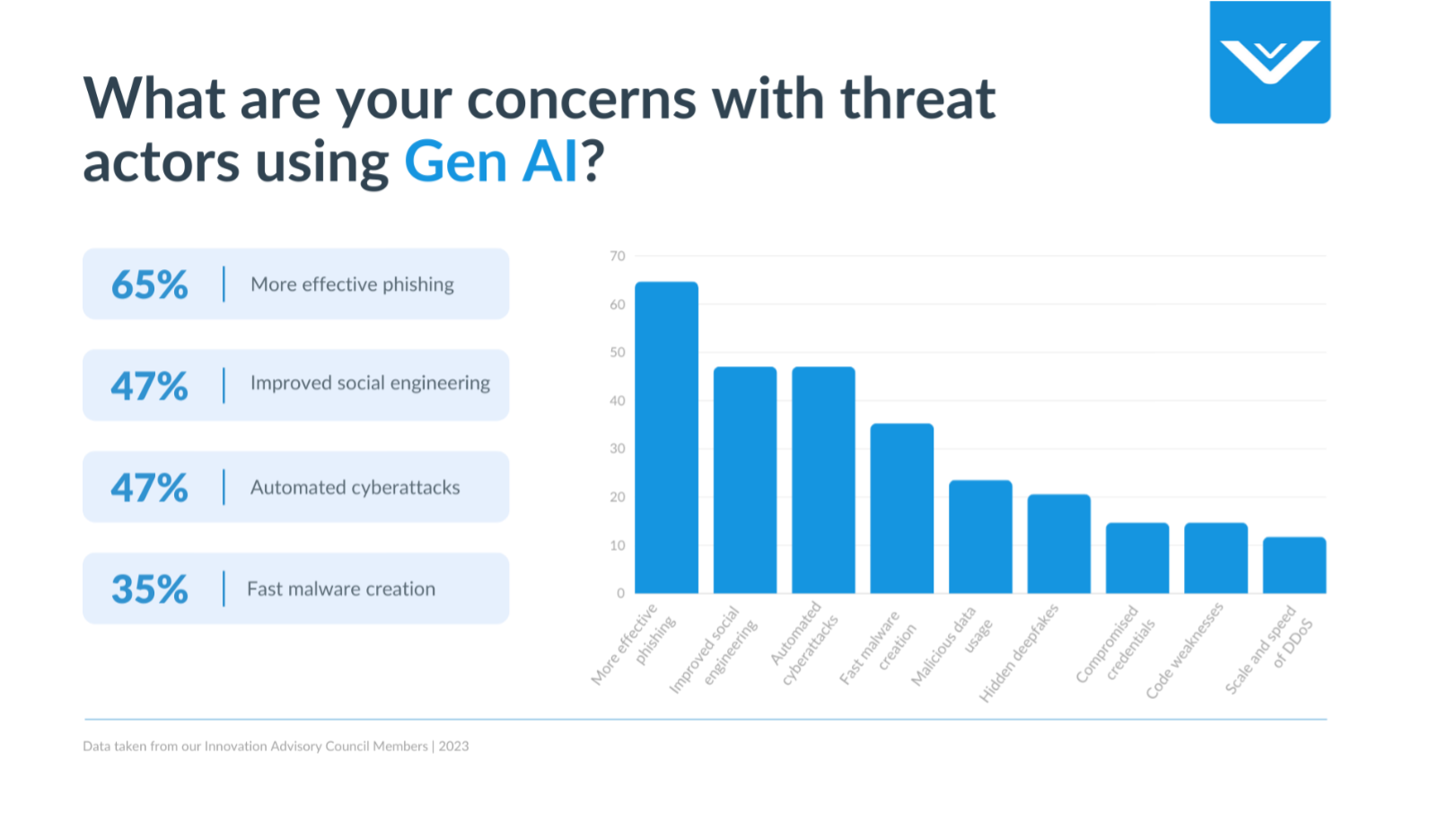

Concerns with Threat Actors Using GenAI

Enhancing Phishing and Social Engineering with Generative AI

Phishing and social engineering attacks are among the most pressing challenges organizations face today, and they commonly lead to compromised credentials and, in turn, compromised accounts. The advent of generative AI models has opened new avenues for malicious actors to carry out these attacks with greater sophistication. These AI systems can generate highly realistic, contextually relevant content, including emails, text messages, and even audio or video clips, making it easier to impersonate legitimate individuals or organizations. By leveraging generative AI, threat actors can craft personalized, convincing lures tailored to specific targets, increasing the likelihood of successful deception. AI-generated content can also help create fake social media profiles, websites, and other digital assets that lend an air of credibility to these campaigns. As a result, threat actors can build campaigns more quickly and manipulate human psychology more effectively than before.

Generative AI in Reconnaissance

Generative AI has the potential to significantly aid threat actors in the critical reconnaissance phase of phishing and social engineering campaigns. By training or prompting AI models with publicly available data from sources such as social media profiles, company websites, and online databases, malicious actors can generate highly detailed personal and organizational profiles. These profiles can reveal potential targets’ interests, relationships, job roles, and online behaviors, intelligence that can be leveraged to craft ultra-personalized phishing lures and pretexts. AI models could also map out organizational hierarchies, reporting structures, and communication patterns, identifying key individuals to target and opportune entry points. By automating reconnaissance, threat groups could rapidly accumulate open-source intelligence at scale, streamlining what has traditionally been a labor-intensive process. As generative AI capabilities advance, defending against AI-powered reconnaissance may require organizations to revisit their data privacy practices and their exposure to open-source intelligence gathering.

Leveraging AI for Enhanced Social Engineering

When creating lures and hooks, threat actors can use generative AI to produce highly realistic, contextually relevant content such as emails, messages, multimedia, and even deepfake videos, making impersonations and pretexts far more believable and harder to distinguish from legitimate communications. AI-generated content can also establish convincing fake personas across social media, websites, and other digital assets to lend credibility to social engineering campaigns. Once a target engages, conversational AI could dynamically tailor the dialog to the target’s responses to maximize psychological manipulation.

In the exploit phase, AI writing assistants can produce tailored, targeted lures and instructions that covertly guide victims into revealing sensitive data or performing malicious actions. After the initial compromise, generative AI could help automate tasks such as crafting plausible cover stories, producing fake content to maintain personas, and even generating code for further exploits. Because defensive tools still struggle to reliably detect AI-generated artifacts, generative AI gives attackers a powerful advantage in conducting sophisticated, evolving social engineering campaigns from initial pretext through exploitation and beyond.

Generative AI and the Rise of Automated Cyberattacks

Threat actors are increasingly leveraging large language models (LLMs) to automate and enhance their cyberattacks, exploiting the rapid advancement and widespread availability of artificial intelligence. LLMs have been noted for increasing both the efficiency and the complexity of attacks, allowing criminals to perform sophisticated tasks at greater speed and scale. For instance, LLMs are used to craft more convincing phishing messages, automate the generation of malicious code, and conduct in-depth reconnaissance on potential targets. This misuse extends to probing systems for vulnerabilities, tailoring social engineering attacks, and automating specific hacking tasks that previously required significant human labor. Cybercriminals’ misuse of LLMs therefore represents an important shift toward more automated, versatile, and stealthy cyber operations, posing heightened challenges for cybersecurity defenses.

Autonomous LLM Agents for Hacking

Recent research has highlighted the potential for generative AI, specifically LLM-based agents, to hack websites autonomously, raising serious cybersecurity concerns. These agents have demonstrated the ability to perform complex attacks like blind SQL union attacks, which require navigating websites and executing multiple steps to exploit vulnerabilities. Such capabilities are particularly pronounced in advanced models like GPT-4, which successfully hacked many test websites by leveraging specialized techniques and the extensive cybersecurity knowledge embedded within the model. This development underscores the dual-use nature of AI technologies: the same capabilities that support legitimate applications can also be misused for malicious purposes, which calls for stringent oversight and ethical consideration in deployment.

LLM-Aided Scripting and Malware Development

Threat actors have been using LLMs like ChatGPT for scripting and malware development, expanding their toolkit for cyber operations. LLMs can help automate and refine the development of scripts and malware by generating code snippets, troubleshooting errors, and suggesting ways to overcome various cybersecurity defenses. For instance, LLMs can write scripts for file manipulation, regular expressions, and even complex multithreading operations, which can be employed in cyberattacks to automate tasks and exploit vulnerabilities. This use of LLMs again highlights the dual-use nature of AI technologies, which can support both legitimate programming tasks and malicious cyber activities. It calls for a more sophisticated approach to cybersecurity, built on robust defenses and proactive monitoring.

Generative AI’s Role in Rapid Malware Development

The emergence of generative AI technologies has brought profound advances across many sectors, including cybersecurity. However, these advances also introduce significant challenges, particularly in malware development. This section explores two critical ways in which generative AI has begun to reshape the landscape of cyber threats: the creation of undetectable malware and the lowering of technical barriers to producing malicious code.

Undetectable Malware

One of the most alarming capabilities facilitated by generative AI is the development of malware designed to evade detection. Traditional malware detection systems often rely on recognizing patterns or signatures associated with known threats. Generative AI, with its ability to learn and mimic patterns, can create malware variants that evade these traditional detection methods. By continuously producing new variants with unique signatures, AI systems can stay ahead of static detection techniques, posing a significant challenge to cybersecurity defenses.

The use of AI to craft polymorphic malware, which can alter its code as it spreads, further complicates detection. Because the code changes dynamically, signature-based defenses have great difficulty tracking and neutralizing such threats. Moreover, AI-driven attacks can leverage natural language processing to craft compelling phishing emails, increasing the success rate of such cyberattacks.

Lowering the Technical Barrier for Creating Malicious Code

Generative AI not only enhances the sophistication of malware but also democratizes its creation. Previously, crafting effective malware required significant technical skill and a deep understanding of software vulnerabilities. AI tools have simplified this process, enabling individuals with minimal technical knowledge to create potent malicious software.

This lowering of barriers is evident in the rise of AI-powered platforms that provide user-friendly interfaces for creating and customizing malware. These platforms often include access to vast databases of vulnerabilities and exploits, which can be combined and configured through simple commands. As a result, the pool of potential cyber attackers has expanded, broadening the overall threat landscape.

Furthermore, integrating AI into development tools can automate parts of the coding process, reducing the time and expertise needed to create complex malicious code. This automation shortens development cycles, allowing attackers to produce new variants and deploy them across multiple systems and networks more quickly, which amplifies the potential impact of attacks.

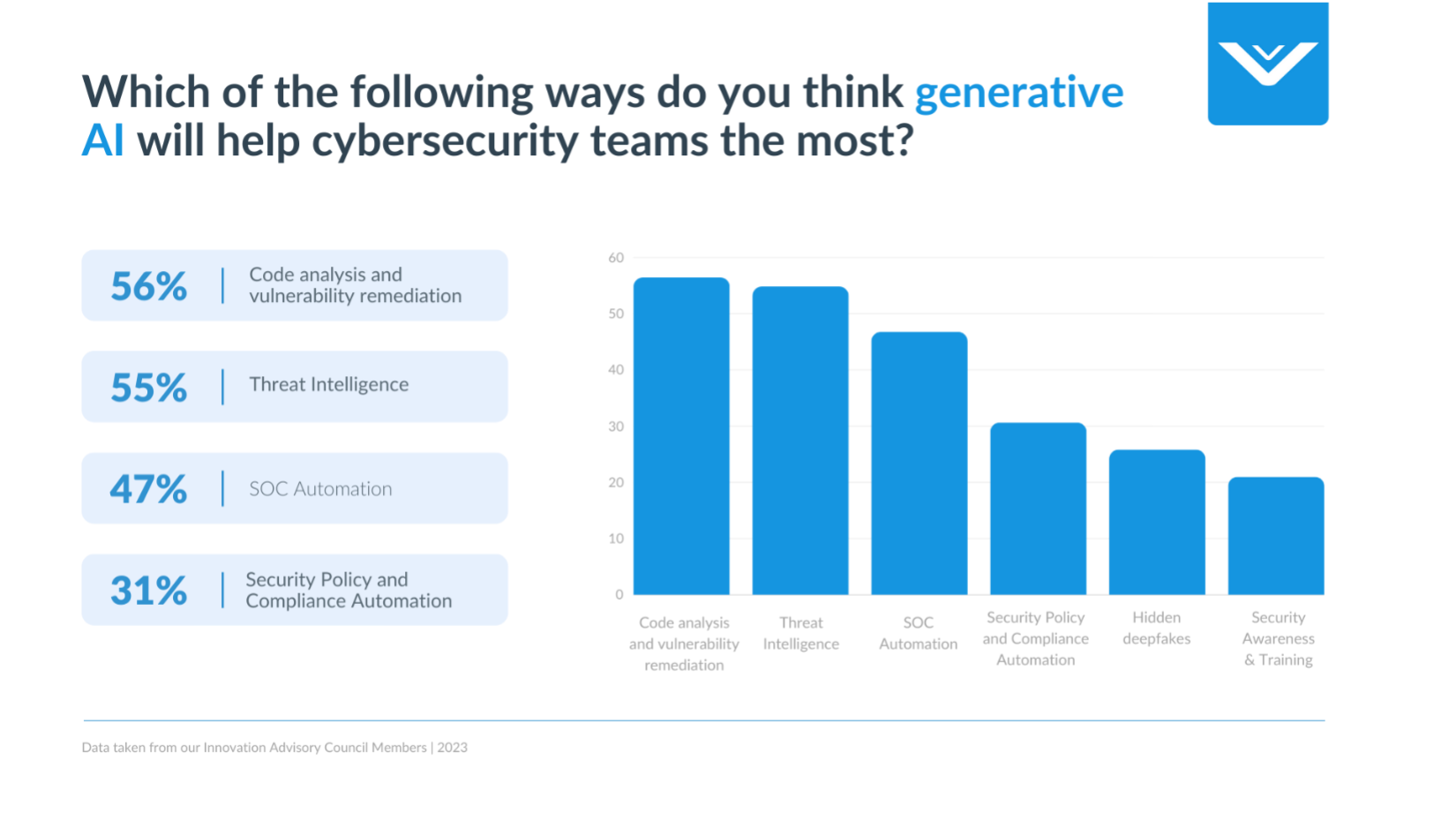

How Generative AI Will Help Cybersecurity Teams the Most

AI-Driven Code Analysis and Vulnerability Detection

As application security has evolved, the adoption of Static Application Security Testing (SAST) and Software Composition Analysis (SCA) tools has surfaced an overwhelming number of vulnerabilities, burdening developers with extensive security backlogs. “Shift left” practices have enabled earlier detection of security issues within the software development lifecycle, yet they do little to simplify remediation. This is where generative AI can make a pivotal difference.

Large Language Models (LLMs) are well suited to both analyzing and generating code, a dual advantage in security applications. These AI-driven systems can help pinpoint security issues that might evade traditional scanning methods. LLMs can also propose actionable fixes for security flaws, accelerating remediation and easing the workload on developers.

- Automated Code Review: GenAI, or any advanced AI system, could facilitate automated code reviews by scanning large codebases and identifying potential vulnerabilities. Machine learning algorithms can learn from historical data to recognize patterns associated with security issues, potentially offering a more efficient and accurate way to identify weaknesses in the code.

- Behavioral Analysis for Anomaly Detection: GenAI might use behavioral analysis to understand the typical behavior of a system or application. By establishing a baseline of normal behavior, the AI can identify anomalies that may indicate security threats. This can be especially helpful in identifying vulnerabilities that are not apparent through static code analysis alone.

- Intelligent Vulnerability Remediation Suggestions: AI systems can analyze the context of identified vulnerabilities. GenAI could provide intelligent remediation suggestions by weighing factors such as the criticality of the vulnerability, its impact on the system, and the existing codebase. This assistance can significantly speed up remediation, allowing cybersecurity teams to prioritize and focus on the most critical issues.
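The behavioral-analysis idea in the list above (establish a baseline of normal behavior, then flag deviations from it) can be illustrated with a deliberately simple statistical sketch. It assumes the monitored signal is a single numeric metric sampled per interval (here, hypothetically, failed logins per hour for one account) and uses a z-score threshold of 3.0 as an assumed cutoff. A GenAI or machine learning system would model far richer behavior; this only shows the baseline-and-deviation principle.

```python
from statistics import mean, stdev


def build_baseline(history: list[float]) -> tuple[float, float]:
    """Summarize 'normal' behavior as the mean and sample standard deviation."""
    if len(history) < 2:
        raise ValueError("need at least two observations to build a baseline")
    return mean(history), stdev(history)


def find_anomalies(
    baseline: tuple[float, float],
    observations: list[float],
    threshold: float = 3.0,  # assumed cutoff; would be tuned per environment
) -> list[int]:
    """Return indices of observations whose z-score exceeds the threshold."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat baseline: any change at all counts as a deviation.
        return [i for i, x in enumerate(observations) if x != mu]
    return [i for i, x in enumerate(observations) if abs(x - mu) / sigma > threshold]


# Hypothetical metric: failed logins per hour for one service account.
normal_week = [4, 5, 3, 6, 5, 4, 5, 6, 4, 5]
today = [5, 4, 41, 6]

baseline = build_baseline(normal_week)
print(find_anomalies(baseline, today))  # prints [2]
```

A z-score on one metric is the simplest possible baseline. It makes the principle concrete but would miss anomalies that only show up across combinations of signals, which is where richer behavioral models are expected to help.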

How Generative AI Transforms Threat Intelligence

Generative AI is transforming cybersecurity by enhancing threat intelligence capabilities in two significant ways. First, it can provide in-depth information about the full scope of threats or indicators of compromise (IOCs), using sophisticated data analysis to build an overview of potential threats from many data points. Second, AI tools can help prioritize active exploits and craft executive summaries specifically for Chief Information Security Officers (CISOs). Together, these capabilities help ensure that high-priority threats are addressed promptly and that key decision-makers have concise, critical insights to guide strategic responses.

Provide information related to the full scope of a threat

Generative AI can significantly enhance threat intelligence by providing comprehensive information about threats or indicators of compromise (IOCs). Using advanced data analysis and pattern recognition capabilities, AI systems can aggregate and analyze data from various sources, including logs, real-time network traffic, and threat databases. This allows security teams to gain a holistic understanding of a threat’s origin, its mechanisms, and potential impacts.
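Aggregating observations from several sources into one per-indicator view is the mechanical core of the "full scope" picture described above. The sketch below assumes each source (log search, network telemetry, and so on) has already been normalized into simple sighting records; the record fields, source labels, and host names are hypothetical, and the natural-language explanation a generative model would layer on top is not shown.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Sighting:
    indicator: str  # e.g. an IP address, domain, or file hash
    source: str     # hypothetical source label such as "proxy_logs"
    host: str       # internal asset where the indicator was observed


def correlate(sightings: list[Sighting]) -> dict[str, dict[str, set[str]]]:
    """Group sightings by indicator, collecting every source and host involved."""
    scope: dict[str, dict[str, set[str]]] = defaultdict(
        lambda: {"sources": set(), "hosts": set()}
    )
    for s in sightings:
        scope[s.indicator]["sources"].add(s.source)
        scope[s.indicator]["hosts"].add(s.host)
    return dict(scope)


sightings = [
    Sighting("203.0.113.7", "proxy_logs", "ws-101"),
    Sighting("203.0.113.7", "netflow", "ws-101"),
    Sighting("203.0.113.7", "netflow", "db-02"),
    Sighting("malicious.example", "dns_logs", "ws-101"),
]
for indicator, view in correlate(sightings).items():
    print(indicator, sorted(view["sources"]), sorted(view["hosts"]))
# 203.0.113.7 ['netflow', 'proxy_logs'] ['db-02', 'ws-101']
# malicious.example ['dns_logs'] ['ws-101']
```

Even this simple grouping shows scope at a glance: the IP address was seen by two independent sources and reached a database server, not just a workstation.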

Prioritize active exploits and provide an executive summary to the CISO

Generative AI tools can prioritize threats based on their activity level, potential impact, and exploitability, ensuring that security teams focus their resources where they are most needed. By assessing the severity and immediacy of each threat, AI can help teams not just react but proactively address vulnerabilities. Furthermore, AI can automate the generation of executive summaries tailored for the Chief Information Security Officer (CISO). These summaries include vital points such as the nature of the threat, affected systems, potential business impacts, and recommended actions. This enables CISOs to quickly grasp the situation and make informed decisions about resource allocation, policy changes, and strategic direction.
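The prioritization step can be made concrete with a weighted score over the three factors the paragraph names: activity level, potential impact, and exploitability. This is a minimal sketch in which the 0 to 1 factor scales, the weights, and the summary format are all assumptions for illustration; it is not a standard scoring model such as CVSS or EPSS, and the fixed template stands in for the prose a generative model would write.

```python
from dataclasses import dataclass

# Assumed weights; an organization would calibrate these to its own risk appetite.
WEIGHTS = {"activity": 0.4, "impact": 0.35, "exploitability": 0.25}


@dataclass
class Threat:
    name: str
    activity: float        # 0..1, how actively it is being exploited
    impact: float          # 0..1, potential business impact if exploited
    exploitability: float  # 0..1, how easy it is to exploit in this environment
    affected_systems: list[str]


def priority(t: Threat) -> float:
    """Weighted sum of the three factors, giving a score between 0 and 1."""
    return (
        WEIGHTS["activity"] * t.activity
        + WEIGHTS["impact"] * t.impact
        + WEIGHTS["exploitability"] * t.exploitability
    )


def executive_summary(threats: list[Threat], top_n: int = 2) -> str:
    """Render the highest-priority threats as short lines for a CISO briefing."""
    ranked = sorted(threats, key=priority, reverse=True)[:top_n]
    lines = [
        f"{i}. {t.name} (score {priority(t):.2f}); affects: {', '.join(t.affected_systems)}"
        for i, t in enumerate(ranked, start=1)
    ]
    return "\n".join(lines)


# Hypothetical threats with illustrative factor values.
threats = [
    Threat("VPN auth bypass", 0.9, 0.9, 0.8, ["vpn-gw-1"]),
    Threat("Outdated TLS config", 0.1, 0.3, 0.4, ["web-01", "web-02"]),
    Threat("Phishing kit targeting finance", 0.7, 0.6, 0.9, ["mail"]),
]
print(executive_summary(threats))
```

Keeping the score a transparent weighted sum makes it easy for analysts to see why one threat outranks another, which matters when the ranking drives resource allocation.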

SOC Automation

It’s a common refrain: security teams are inundated with alerts. A security analyst’s typical workflow involves a laborious, manual process of combing through events and log data with various tools to piece together what happened. When an alert is verified as a true positive, unraveling the full extent of the incident demands even more effort. Major security categories today, including SIEM (security information and event management) and SOAR (security orchestration, automation, and response), are designed to make Security Operations Centers (SOCs) more efficient, but much of this triage and investigation remains manual.

The potential of Large Language Models (LLMs) to transform the SOC analyst’s workflow—from triage to response—is significant. Unlike traditional security automation tools, which depend on manually crafted playbooks and workflows, LLM-enabled systems could autonomously evaluate an alert, chart the necessary investigative steps, execute queries to gather needed data and context, and formulate and propose a response strategy to human operators.

Build Security Automation Runbooks

Large Language Models (LLMs) could streamline Security Operations Centers (SOCs) by automating the creation and maintenance of security automation runbooks. A security automation runbook is a documented plan, or set of standardized instructions, that outlines the procedures to follow in response to a specific cybersecurity incident or event. Because they can process and interpret large amounts of data, LLMs could generate these runbooks and update them regularly as the threat landscape and best practices evolve. This capability can reduce the need for manual updates and help keep the security protocols in the runbooks aligned with the latest standards. LLMs can also make automated responses more relevant by tailoring them to the specific context of each security alert. Instead of relying solely on predetermined scripts, these adaptive responses take into account factors such as the type of threat, the systems affected, and the potential impact of the incident. This approach speeds up response times and helps ensure that actions are calibrated to mitigate the incident efficiently.
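To illustrate what tailoring a runbook to alert context can look like in practice, the sketch below represents a runbook as structured data and selects steps based on the threat type and the types of assets affected. The schema, step wording, asset tags, and the convention of marking destructive steps as requiring analyst approval are all hypothetical; in an LLM-assisted workflow, a model might draft or update entries like these for analysts to review.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    action: str
    applies_to: set[str] = field(default_factory=set)  # asset tags; empty = always
    needs_approval: bool = False  # destructive steps wait for a human analyst


# Hypothetical runbook library keyed by threat type.
RUNBOOKS: dict[str, list[Step]] = {
    "credential_phishing": [
        Step("Quarantine the reported email across all mailboxes"),
        Step("Force a password reset for the targeted user", needs_approval=True),
        Step("Revoke active sessions on the identity provider", {"sso"}),
        Step("Isolate the endpoint from the network", {"endpoint"}, needs_approval=True),
    ],
}


def plan_response(threat_type: str, asset_tags: set[str]) -> list[str]:
    """Select the runbook steps that fit this alert's context."""
    plan = []
    for step in RUNBOOKS.get(threat_type, []):
        # Keep generic steps, plus steps that target an affected asset type.
        if not step.applies_to or step.applies_to & asset_tags:
            prefix = "[approval] " if step.needs_approval else ""
            plan.append(prefix + step.action)
    return plan


for line in plan_response("credential_phishing", {"sso"}):
    print(line)
```

Here an alert involving only the identity provider yields three steps and skips endpoint isolation. Keeping the runbook as data rather than hard-coded logic is what would let a model propose edits that humans can diff and approve.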

Recommended Remediation

Large Language Models (LLMs) can enhance the remediation process in Security Operations Centers (SOCs) by offering actionable insights and supporting collaborative decision-making. Using the data gathered during triage and investigation, LLMs can develop specific remediation strategies prioritized by the severity and potential impact of the incident, giving SOC analysts clear guidance on how to address and mitigate threats. Beyond suggesting remediation actions, LLMs can support collaboration by letting human analysts engage with the model’s recommendations, for example by presenting simulated outcomes of different remedial actions so that analysts can visualize the potential effects of each proposed solution.

Steps Organizations are Taking to Mitigate the Security Risks Posed by AI

Organizations have begun addressing the security challenges posed by AI, focusing on implementing robust AI security policies and educating their workforce on AI security best practices.

Implementing AI Security Policies and Procedures

Companies are developing comprehensive policies that govern the use, development, and deployment of AI systems, tailored to their specific technologies and use cases. These guidelines cover ethical AI usage, model governance, and risk management frameworks. To secure data handling, organizations have implemented strict procedures, including advanced encryption methods and robust access controls, to maintain data integrity throughout the AI lifecycle. Their teams also conduct regular security assessments and audits of AI systems; this proactive scrutiny helps identify vulnerabilities early and supports ongoing compliance with evolving security standards.

Training Employees on AI Security Best Practices

In parallel, organizations are committed to educating their employees on AI security through interactive workshops and online courses covering data privacy, model security, and secure coding practices. These training sessions are designed to be accessible to all employees, enhancing their understanding of crucial security measures. Organizations also conduct simulation exercises and tabletop drills to prepare employees to respond effectively to AI-related security incidents. Furthermore, developers, data scientists, and IT personnel receive specialized training to secure AI models and systems throughout their development and operational phases. This includes learning secure software development practices, anomaly detection in AI operations, and advanced defensive techniques against AI-specific threats.

Impact on Risk Mitigation

These measures reduce the risk of unauthorized access to sensitive data and make it harder for malicious actors to compromise AI systems. Regular audits and strict policy adherence support compliance with legal and regulatory requirements, mitigating non-compliance risks. Comprehensive training programs also minimize risks arising from human error, such as misconfigurations or unintentional data leaks. These initiatives equip employees to better recognize and respond to security threats, fostering a proactive approach and a strong culture of security awareness across the organization.

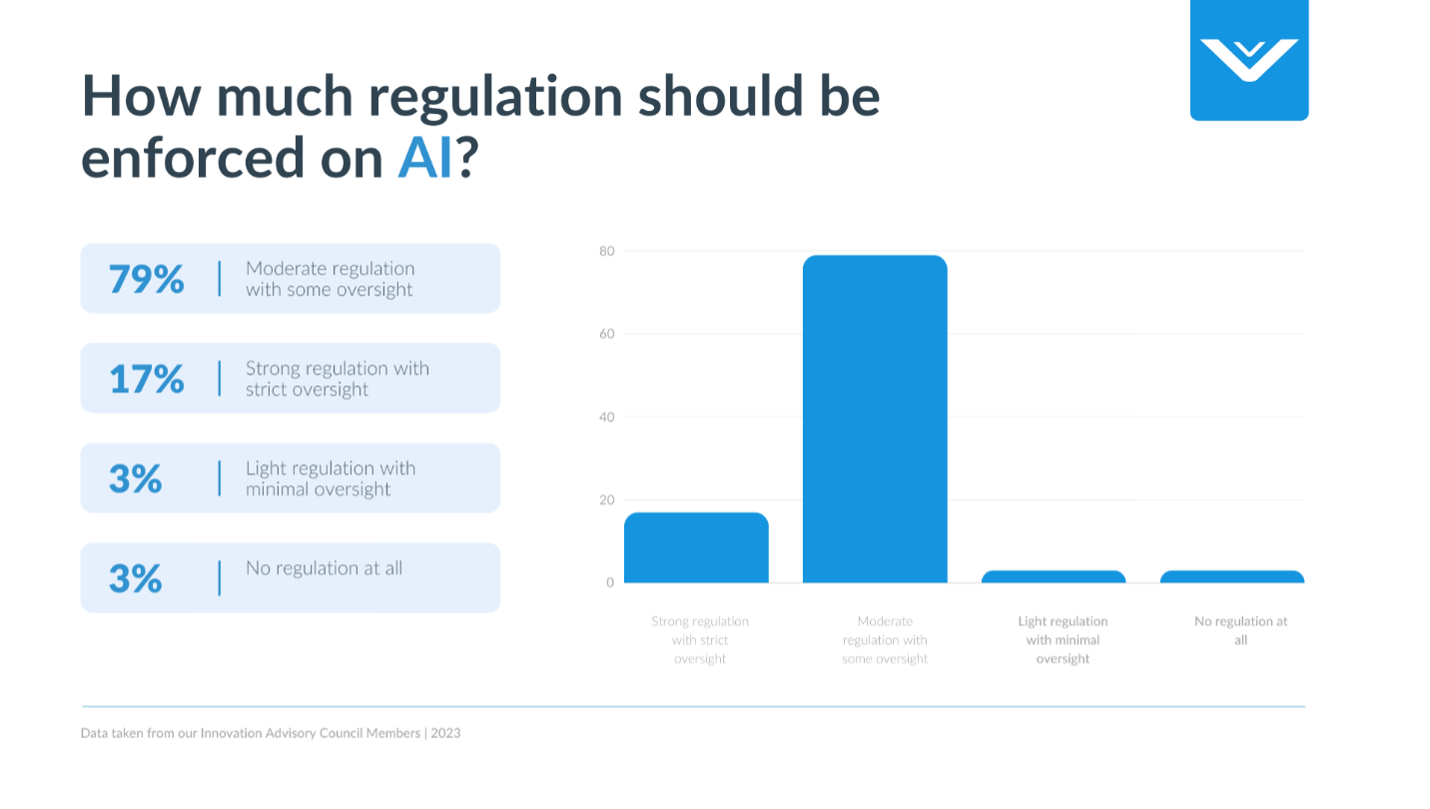

How much regulation should be enforced on AI?

The question of how much regulation should be enforced on AI is contentious. Critics argue that imposing stringent regulations on AI development is premature. They assert that preemptive regulation may be based on speculative harms rather than grounded evidence, which could hinder countries’ technological advancement and limit their ability to compete globally.

Moreover, there is skepticism about the effectiveness of regulation in the tech industry. Skeptics point to historical examples in which regulation of new technologies lacked a proven track record of success and instead created barriers to entry, protected obsolete industries, or stifled innovative approaches that deviated from the norm.

Furthermore, proponents of minimal regulation argue that AI, like other transformative technologies, thrives in an environment of innovation that overly prescriptive rules could stifle. They suggest that regulation might limit the exploration of beneficial AI applications and deter investment and interest in developing cutting-edge AI solutions. Instead of imposing blanket regulations, they advocate for a more measured approach that fosters innovation while addressing specific risks as they arise, ensuring that AI development is safe and beneficial without compromising its potential. This debate highlights the delicate balance between fostering innovation and ensuring public safety in the age of artificial intelligence.

In the debate over how much regulation should be enforced on artificial intelligence (AI), the perspectives of those at the helm of technology development are crucial. Data gathered from technology executives reveals a nuanced stance toward regulatory measures. A significant 79% of these leaders advocate for moderate regulation, suggesting a preference for a balanced approach that neither stifles innovation nor leaves the field unchecked. This majority viewpoint supports the idea that while some level of oversight is necessary to guide AI development safely and ethically, it should not be so restrictive as to impede technological progress.

Conclusion

As we navigate the complex cybersecurity landscape in an era shaped by rapid technological advancement, it becomes evident that Generative Artificial Intelligence (GenAI) is both a powerful ally and a formidable adversary. The insights gleaned from our survey of leading technology executives underscore the multifaceted role of GenAI in reshaping cybersecurity practices across industries and regions worldwide.

From the sophisticated tactics employed by threat actors leveraging GenAI to the transformative potential it holds for bolstering security measures, our exploration has illuminated key challenges and opportunities at the intersection of AI and cybersecurity. The emergence of AI-driven deepfakes, automated cyberattacks, and undetectable malware underscores the urgent need for proactive strategies to mitigate evolving threats.

Yet, amidst these challenges lies a realm of promise. GenAI offers cybersecurity teams invaluable tools for code analysis, vulnerability detection, and threat intelligence enhancement. By harnessing AI's capabilities, organizations can fortify their defenses, automate SOC workflows, and stay ahead of emerging threats.

As we contemplate the regulatory landscape surrounding AI, the voices of technology executives echo a call for balanced oversight that promotes innovation while safeguarding against potential risks. With 79% advocating for moderate regulation, there is broad agreement that responsible governance is essential to steer AI development ethically and securely.

Collaboration, innovation, and informed regulation will be paramount in this ever-evolving cybersecurity landscape. By embracing the transformative potential of GenAI while mitigating its inherent risks, we can forge a path toward a more resilient and secure digital future. If you’re looking to learn more about these insights, reach out to our research team to get connected and see how our experts can assist you as you navigate the new age of AI.